Announcing new journal article: “Why Parents Help Their Children Lie to Facebook About Age: Unintended Consequences of the ‘Children’s Online Privacy Protection Act’” by danah boyd, Eszter Hargittai, Jason Schultz, and John Palfrey, First Monday.

“At what age should I let my child join Facebook?” This is a question that countless parents have asked my collaborators and me. Often, it’s followed by another: “I know that 13 is the minimum age to join Facebook, but is it really so bad that my 12-year-old is on the site?”

While parents are struggling to determine what social media sites are appropriate for their children, government tries to help parents by regulating what data internet companies can collect about children without parental permission. Yet, as has been the case for the last decade, this often backfires. Many general-purpose communication platforms and social media sites restrict access to only those 13+ in response to a law meant to empower parents: the Children’s Online Privacy Protection Act (COPPA). This forces parents to make a difficult choice: help uphold the minimum age requirements and limit their children’s access to services that let kids connect with family and friends OR help their children lie about their age to circumvent the age-based restrictions and eschew the protections that COPPA is meant to provide.

In order to understand how parents were approaching this dilemma, my collaborators — Eszter Hargittai (Northwestern University), Jason Schultz (University of California, Berkeley), John Palfrey (Harvard University) — and I decided to survey parents. In many ways, we were responding to a flurry of studies (e.g. Pew’s) that revealed that millions of U.S. children have violated Facebook’s Terms of Service and joined the site underage. These findings prompted outrage back in May as politicians blamed Facebook for failing to curb underage usage. Embedded in this furor was an assumption that by not strictly guarding its doors and keeping children out, Facebook was undermining parental authority and thumbing its nose at the law. Facebook responded by defending its practices — and highlighting how it regularly ejects children from its site. More controversially, Facebook’s founder Mark Zuckerberg openly questioned the value of COPPA in the first place.

While Facebook has often sparked anger over its cavalier attitudes towards user privacy, Zuckerberg’s challenge with regard to COPPA has merit. It’s imperative that we question the assumptions embedded in this policy. All too often, the public takes COPPA at face-value and politicians angle to build new laws based on it without examining its efficacy.

Eszter, Jason, John, and I decided to focus on one core question: Does COPPA actually empower parents? To find out, we surveyed parents about their household practices with respect to social media and their attitudes towards age restrictions online. We are proud to release our findings today, in a new paper published at First Monday called “Why parents help their children lie to Facebook about age: Unintended consequences of the ‘Children’s Online Privacy Protection Act’.” From a national survey of 1,007 U.S. parents with children ages 10-14 living with them, conducted July 5-14, 2011, we found:

- Although Facebook’s minimum age is 13, parents of 13- and 14-year-olds report that, on average, their child joined Facebook at age 12.

- Half (55%) of parents of 12-year-olds report their child has a Facebook account, and most (82%) of these parents knew when their child signed up. Most (76%) also assisted their 12-year-old in creating the account.

- A third (36%) of all parents surveyed reported that their child joined Facebook before the age of 13, and two-thirds of them (68%) helped their child create the account.

- Half (53%) of parents surveyed think Facebook has a minimum age and a third (35%) of these parents think that this is a recommendation and not a requirement.

- Most (78%) parents think it is acceptable for their child to violate minimum age restrictions on online services.

The status quo is not working if large numbers of parents are helping their children lie to get access to online services. Parents do appear to be having conversations with their children, as COPPA intended. Yet, what does it mean if they’re doing so in order to violate the restrictions that COPPA engendered?

One reaction to our data might be that companies should not be allowed to restrict children’s access to their sites. Unfortunately, getting the parental permission required by COPPA is technologically difficult, financially costly, and ethically problematic. Sites that target children take on this challenge, but often by excluding children whose parents lack resources to pay for the service, those who lack credit cards, and those who refuse to provide extra data about their children in order to offer permission. The situation is even more complicated for children who are in abusive households, have absentee parents, or regularly experience shifts in guardianship. General-purpose sites, including communication platforms like Gmail and Skype and social media services like Facebook and Twitter, generally prefer to avoid the social, technical, economic, and free speech complications involved.

While there is merit to thinking about how to strengthen parent permission structures, focusing on this obscures the issues that COPPA is intended to address: data privacy and online safety. COPPA predates the rise of social media. Its architects never imagined a world where people would share massive quantities of data as a central part of participation. It no longer makes sense to focus on how data are collected; we must instead question how those data are used. Furthermore, while children may be an especially vulnerable population, they are not the only vulnerable population. Most adults have little sense of how their data are being stored, shared, and sold.

COPPA is a well-intentioned piece of legislation with unintended consequences for parents, educators, and the public writ large. It has stifled innovation for sites focused on children and its implementations have made parenting more challenging. Our data clearly show that parents are concerned about privacy and online safety. Many want the government to help, but they don’t want solutions that unintentionally restrict their children’s access. Instead, they want guidance and recommendations to help them make informed decisions. Parents often want their children to learn how to be responsible digital citizens. Allowing them access is often the first step.

Educators face a different set of issues. Those who want to help youth navigate commercial tools often encounter the complexities of age restrictions. Consider the 7th grade teacher whose students are heavy Facebook users. Should she admonish her students for being on Facebook underage? Or should she make sure that they understand how privacy settings work? Where does digital literacy fit in when what children are doing is in violation of websites’ Terms of Service?

At first blush, the issues surrounding COPPA may seem to only apply to technology companies and the government, but their implications extend much further. COPPA affects parenting, education, and issues surrounding youth rights. It affects those who care about free speech and those who are concerned about how violence shapes home life. It’s important that all who care about youth pay attention to these issues. They’re complex and messy, full of good intention and unintended consequences. But rather than reinforcing or extending a legal regime that produces age-based restrictions which parents actively circumvent, we need to step back and rethink the underlying goals behind COPPA and develop new ways of achieving them. This begins with a public conversation.

We are excited to release our new study in the hopes that it will contribute to that conversation. To read our complete findings and learn more about their implications for policy makers, see “Why Parents Help Their Children Lie to Facebook About Age: Unintended Consequences of the ‘Children’s Online Privacy Protection Act’” by danah boyd, Eszter Hargittai, Jason Schultz, and John Palfrey, published in First Monday.

To learn more about the Children’s Online Privacy Protection Act (COPPA), make sure to check out the Federal Trade Commission’s website.

(Versions of this post were originally written for the Huffington Post and for the Digital Media and Learning Blog.)

Image Credit: Tim Roe

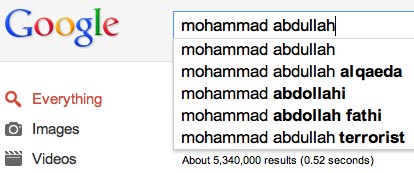

You’re a 16-year-old Muslim kid in America. Say your name is Mohammad Abdullah. Your schoolmates are convinced that you’re a terrorist. They keep typing in Google queries like “is Mohammad Abdullah a terrorist?” and “Mohammad Abdullah al Qaeda.” Google’s search engine learns. All of a sudden, auto-complete starts suggesting terms like “Al Qaeda” as the next term in relation to your name. You know that colleges are looking up your name and you’re afraid of the impression that they might get based on that auto-complete. You are already getting hostile comments in your hometown, a decidedly anti-Muslim environment. You know that you have nothing to do with Al Qaeda, but Google gives the impression that you do. And people are drawing that conclusion. You write to Google but nothing comes of it. What do you do?