(This was originally posted on NewCo Shift.)

A friend of mine worked for an online dating company whose audience was predominantly hetero 30-somethings. At some point, they realized that a large number of the “female” accounts were actually bait for porn sites and 1–900 numbers. I don’t remember if users complained or if they found it themselves, but they concluded that they needed to get rid of these fake profiles. So they did.

And then their numbers started dropping. And dropping. And dropping.

To understand why, they sent in researchers. What they learned was that hot men were attracted to the site because there were women on it who they felt were out of their league. Most of these hot men didn’t really aim for these ultra-hot women, because they assumed those women would be inaccessible, but they were happy to talk with women who they saw as one rung down (as in actual hot women). These hot women, meanwhile, were excited to have these hot men (who they saw as equals) on the site. They also felt that, since there were women hotter than them, this was a site for them. When the company removed the fakes, the hot men felt the site was no longer for them. They disappeared. And then so did the hot women. Etc. The weirdest part? The company reintroduced decoy profiles (not as redirects to porn but as fake women who just didn’t respond) and slowly folks came back.

Why am I telling you this story? Fake accounts and bots on social media are not new. Yet, in the last couple of weeks, there’s been newfound hysteria around Twitter bots and fake accounts. I find it deeply problematic that folks are saying that having fake followers is inauthentic. This is like saying that makeup is inauthentic. What is really going on here?

From Fakesters to Influencers

From the earliest days of Friendster and MySpace, people liked to show how cool they were by how many friends they had. As Alice Marwick eloquently documented, self-branding and performing status were the name of the game for many in the early days of social media. This hasn’t changed. People made entire careers out of appearing to be influential, not just actually being influential. Of course, a market emerged around this so that people could buy and sell followers, friends, likes, comments, etc. Indeed, this is standard practice, especially in the wink-nudge world of Instagram, where monetized content is the game: so-called organic “macroinfluencers” can easily double their follower counts through bots, and they are more than happy to be followed by bots, paid or not.

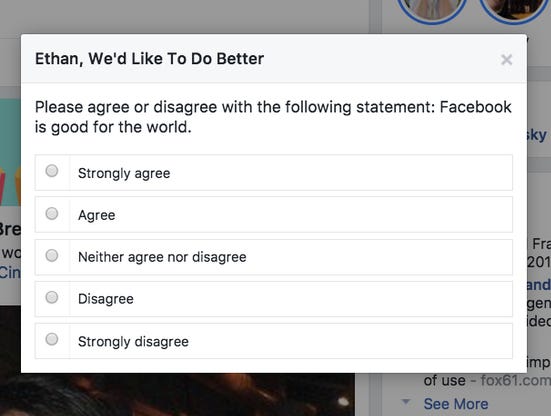

Some sites have tried to get rid of fake accounts. Indeed, Friendster played whack-a-mole with them, killing off “Fakesters” and any account that didn’t meet its strict requirements; this prompted a mass exodus. Facebook’s real-name policy also signaled that such shenanigans would not be allowed on its site, although shhh… lots of folks figured out how to have multiple accounts and otherwise circumvent the policy.

And let’s be honest: fake profiles are all over most online dating sites. Ashley Madison, anyone?

Bots, Bots, Bots

Bots have been an intrinsic part of Twitter since the early days. Following the Pope’s daily text messaging services, the Vatican set up numerous bots offering Catholics regular reflections. Most major news organizations have bots so that you can keep up with the headlines of their publications. Twitter’s almost-anything-goes policy meant that people have built bots for all sorts of purposes. There are bots that do poetry, ones that argue with anti-vaxxers about their beliefs, and ones that call out sexist comments people post. I’m a big fan of the @censusAmericans bot created by FiveThirtyEight to regularly send out data from the Census about Americans.

Over the last year, sentiment towards Twitter’s bots has become decidedly negative. Perhaps most people didn’t even realize that there were bots on the site. They probably don’t think of @NYTimes as a bot. When news coverage obsesses over bots, it primarily associates the phenomenon with nefarious activities meant to seed discord, create chaos, and do harm. It can all be boiled down to: Russian bots. As a result, Congress came to see bots as inherently bad, and journalists keep accusing Twitter of having a “bot problem” without accounting for how their own stories appear on Twitter through bots.

Although we often hear about the millions and millions of bots on Twitter as though they’re all manipulative, the stark reality is that bots can be quite fun. I had my students build Twitter bots to teach them how these things worked — they had a field day, even if they didn’t get many followers.

Of course, there are definitely bots that you can buy to puff up your status. Some of them might even be Russian built. And here’s where we get to the crux of the current conversation.

Buying Status

People buy bots to increase their number of followers, retweets, and likes in order to appear cooler than they are. Think of this as mascara for your digital presence. While plenty of users are happy chatting away with their friends without their makeup on, there’s an entire class of professionals who feel the need to be dolled up and to give the best impression possible. It’s a competition for popularity and status, marked by numbers.

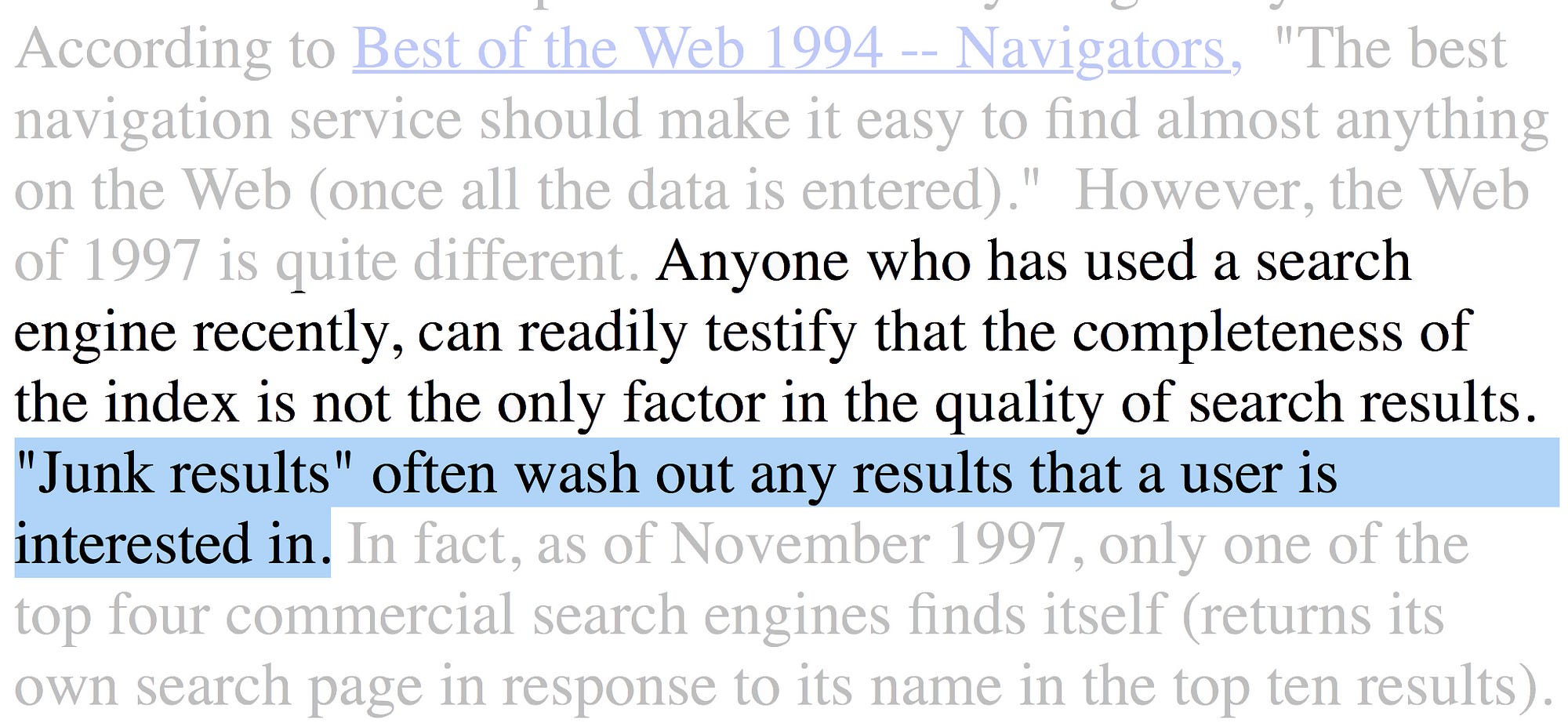

Number games are not new, especially not in the world of media. Take a well-established firm like Nielsen. Although journalists often uncritically quote Nielsen numbers as though they are “fact,” most people in the ad and media business know that they’re crap. But they’ve long been the best crap out there. And, more importantly, they’re uniform crap so businesses can make predictable decisions off of these numbers, fully aware that they might not be that accurate. The same has long been true of page views and clicks. No major news organization should take their page views literally. And yet, lots of news agencies rank their reporters based on this data.

What makes the purchasing of Twitter bots and status so nefarious? The NYTimes story suggests that doing so is especially deceptive. Their coverage shamed Twitter into deleting a bunch of Twitter accounts, outing all of the public figures who had bought bots. It almost felt like a discussion of who had gotten Botox.

Much of this recent flurry of coverage suggests that the so-called bot problem is a new thing that is “finally” known. It boggles my mind to think that any regular Twitter user hadn’t seen automated accounts in the past. And heck, there have been services like Twitter Audit to see how many fake followers you have since at least 2012. Gilad Lotan even detailed the ecosystem of buying fake followers in 2014. I think that what’s new is that the term “bot” is suddenly toxic. And it gives us an opportunity to engage in another round of social shaming targeted at insecure people’s vanity all under the false pretense of being about bad foreign actors.

I’ve never been one to feel the need to put on a lot of makeup in order to leave the house and I haven’t been someone who felt the need to buy bots to appear cool online. But I find it deeply hypocritical to listen to journalists and politicians wring their hands about fake followers and bots given that they’ve been playing at that game for a long time. Who among them is really innocent of trying to garner attention through any means possible?

At the end of the day, I don’t really blame Twitter for giving these deeply engaged users what they want and turning a blind eye towards their efforts to puff up their status online. After all, cosmetics is a $55 billion industry. Then again, even cosmetic companies sometimes change their formulas when their products receive bad press.

Note: I’m fully aware of hypotheses that bots have destroyed American democracy. That’s a different essay. But I think that the main impact that they have had, like spam, is to destabilize people’s trust in the media ecosystem. Still, we need to contend with the stark reality that they do serve a purpose and some people do want them.

I was 19 years old when some configuration of anonymous people came after me. They got access to my email and shared some of my most sensitive messages on an anonymous forum. This was after some of my female friends received anonymous voice messages describing how they would be raped. And after the black and Latinx high school students I was mentoring were subjected to targeted racist messages whenever they logged into the computer cluster we were all using. I was ostracized for raising all of this with the computer science department’s administration. A year later, when I applied for an internship at Sun Microsystems, an alum known for his connection to the anonymous server that was used actually said to me, “I thought that they managed to force you out of CS by now.”